All data in your computer is stored using bits (binary digits) – the fundamental building blocks of digital information. This comprehensive tutorial explores how computers evolved from mechanical switches to modern microprocessors, all while maintaining the same basic principle of using only 0s and 1s to represent information.

From Decimal to Binary: Why Computers Use 0s and 1s

The Decimal System We Know

Humans naturally use the decimal system for mathematics, which consists of the digits 0 through 9. The word “decimal” comes from the Latin “decem,” meaning ten. In decimal numbers, each position represents a power of 10:

Decimal place values: 100,000 | 10,000 | 1,000 | 100 | 10 | 1

Example: The number 3,256 contains:

- 3 thousands (3 × 1,000)

- 2 hundreds (2 × 100)

- 5 tens (5 × 10)

- 6 ones (6 × 1)
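As a quick check of the place-value idea, here is a minimal Python sketch that splits 3,256 into digit × place-value pairs and adds them back up. The function name `decimal_places` is a hypothetical helper chosen for this illustration.

```python
def decimal_places(n: int) -> list[tuple[int, int]]:
    """Split a non-negative integer into (digit, place value) pairs, most significant first."""
    digits = str(n)
    pairs = []
    for i, ch in enumerate(digits):
        power = len(digits) - 1 - i  # position counted from the right, starting at 0
        pairs.append((int(ch), 10 ** power))
    return pairs


parts = decimal_places(3256)
for digit, place in parts:
    print(f"{digit} x {place:,}")  # 3 x 1,000 / 2 x 100 / 5 x 10 / 6 x 1

# Adding every digit times its place value rebuilds the original number.
print(sum(digit * place for digit, place in parts))  # 3256
```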

Why Binary Makes Sense for Computers

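Early computing pioneers found that building devices to work reliably with all 10 decimal digits was extremely complex: a circuit would have to tell ten different signal levels apart, and small amounts of electrical noise could shift one level into the next. It was much simpler to design systems that only needed to distinguish between two states: ON (1) and OFF (0).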

Evolution of Computer Hardware: Four Generations

Early Electro-Mechanical

Used relay switches where ON = 1 and OFF = 0

First Generation

Used vacuum tubes that could be switched on or off to represent binary values

Second Generation

Used transistors, in which a small signal on one terminal (the gate) controls the flow of electricity between the other two (the source and the drain)

Third & Fourth Generation

Used integrated circuits (many transistors on a single chip) and then microprocessors (an entire processor on one chip), with millions and now billions of tiny transistors

Understanding the Binary System

The binary system gets its name from “bi,” meaning 2. Unlike decimal’s base-10 system, binary uses base-2, with only two possible values: 0 and 1.

Bits and Bytes Explained

- Bit: Short for “binary digit” – a single 0 or 1

- Byte: A combination of 8 bits that can represent a number or character
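Because each bit has two possible values, every extra bit doubles the number of patterns. The short Python snippet below, written only as an illustration, counts the patterns for a few bit widths and shows the range of numbers a single byte can hold.

```python
# Each extra bit doubles the number of distinct patterns, so n bits give 2**n values.
for n in (1, 2, 4, 8):
    print(f"{n} bit(s): {2 ** n} possible values")

# A byte is 8 bits, so it can hold 256 patterns: the numbers 0 through 255.
smallest_byte = 0b00000000
largest_byte = 0b11111111
print(smallest_byte, largest_byte)  # 0 255
```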

Binary Place Values

In binary, each position represents a power of 2 (instead of 10):

Binary Example: Converting 01011010 to Decimal (90)

Bits: 0 | 1 | 0 | 1 | 1 | 0 | 1 | 0

Binary place values: 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1

Calculation: 64 + 16 + 8 + 2 = 90
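The same place-value method can be written as a short Python sketch. The names `binary_to_decimal` and `decimal_to_binary` are hypothetical helpers written for this illustration; the second one goes in the reverse direction using repeated division by 2, a standard technique that exactly undoes the conversion above.

```python
def binary_to_decimal(bits: str) -> int:
    """Add up the place values (powers of 2) of every position that holds a 1."""
    total = 0
    for position, bit in enumerate(reversed(bits)):
        if bit == "1":
            total += 2 ** position  # the rightmost position is 2**0 = 1
        elif bit != "0":
            raise ValueError(f"not a binary digit: {bit!r}")
    return total


def decimal_to_binary(n: int, width: int = 8) -> str:
    """Repeatedly divide by 2; the remainders are the bits, least significant first."""
    if n < 0:
        raise ValueError("only non-negative numbers are handled here")
    bits = []
    while n > 0:
        n, remainder = divmod(n, 2)
        bits.append(str(remainder))
    # Reverse so the most significant bit comes first, then pad with zeros to the width.
    return "".join(reversed(bits)).rjust(width, "0")


print(binary_to_decimal("01011010"))  # 90
print(decimal_to_binary(90))          # 01011010
# Python's built-ins agree: int(text, 2) parses binary and format(n, "08b") prints it.
print(int("01011010", 2), format(90, "08b"))  # 90 01011010
```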

How Computers Represent Text: ASCII and Unicode

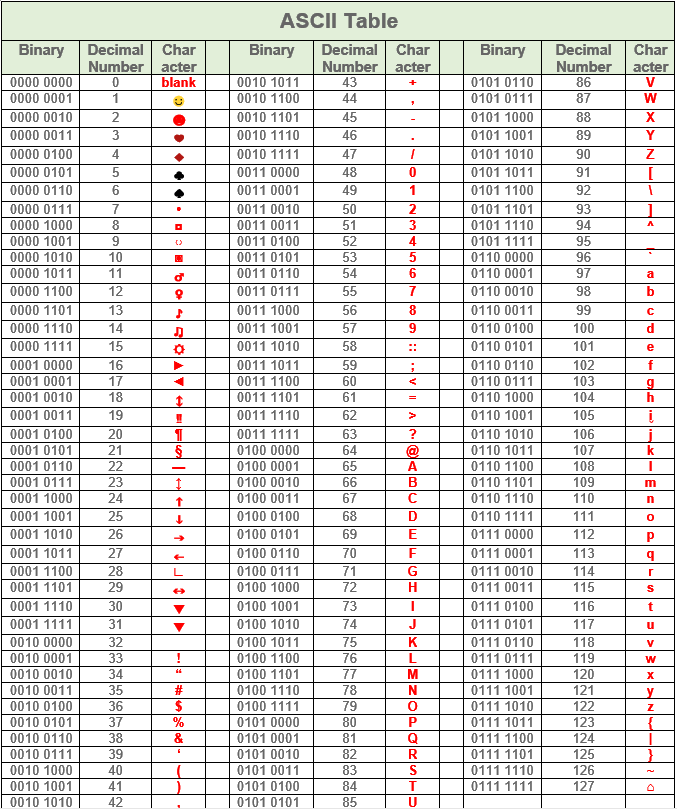

ASCII (American Standard Code for Information Interchange)

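ASCII is a 7-bit code: it defines 128 characters (codes 0-127, since 2⁷ = 128), covering English letters, digits, punctuation, and control codes. Because computers store each character in an 8-bit byte, the extra bit leaves room for 128 more codes (128-255); various “extended ASCII” character sets use these, for 256 characters in total (2⁸ = 256).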

Try it yourself: On Windows, in applications like Excel or Notepad, turn on Num Lock, hold Alt, and type a decimal number on the numeric keypad:

- Alt + 65 = A (capital letter A)

- Alt + 1 = ☺ (smiley face)
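The character codes can also be explored in Python. Python's `ord()` actually returns a character's Unicode code point, but for codes 0-127 that number is identical to the ASCII code, so this sketch shows the ASCII values and their 8-bit patterns. The Alt + 1 smiley is not reproduced here, since code 1 is an invisible control character in ASCII itself.

```python
# ord() gives a character's code number; chr() turns a code number back into a character.
for ch in "Hi!":
    code = ord(ch)
    print(ch, code, format(code, "08b"))  # character, decimal code, 8-bit pattern

print(chr(65))  # A, the same code the Alt + 65 shortcut uses

# Every standard ASCII character has a code from 0 to 127, so it fits in 7 bits.
print(all(ord(c) < 128 for c in "Hello, World!"))  # True
```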

Unicode: Supporting Global Languages

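While ASCII worked well for English, other languages needed many more symbols. Unicode solves this by giving every character its own number, called a code point, from a range of 1,114,112 possible values (U+0000 to U+10FFFF), enough for all the world’s writing systems plus many symbols and emoji. Each code point is stored in at most 4 bytes (32 bits): the UTF-32 encoding always uses 4 bytes, while the widely used UTF-8 encoding uses 1 to 4 bytes and stores the original ASCII characters in a single byte.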
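To see the variable-length behavior of UTF-8, here is a small Python sketch using the standard `str.encode` method. The sample characters are arbitrary choices for the illustration, written as escape codes so their code points are unambiguous.

```python
# Sample characters written as escapes so the exact code points are unambiguous:
# "A" (U+0041), "é" (U+00E9), "€" (U+20AC) and the emoji "😀" (U+1F600).
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]

for ch in samples:
    utf8 = ch.encode("utf-8")
    utf32 = ch.encode("utf-32-le")  # little-endian UTF-32, without a byte-order mark
    print(f"U+{ord(ch):04X}: UTF-8 uses {len(utf8)} byte(s), UTF-32 uses {len(utf32)}")

# Code points run from U+0000 to U+10FFFF.
print(0x10FFFF + 1)  # 1114112 possible code points
```

The output shows UTF-8 growing from 1 to 4 bytes across the samples, while UTF-32 spends 4 bytes on every character, which is why UTF-8 is usually the more compact choice for mostly-English text.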

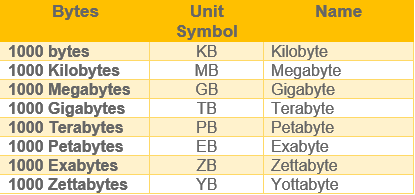

Data Storage Units: From Bits to Terabytes

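Computer storage is measured using standardized units. Traditionally each unit is 1,024 (2¹⁰) times the one before it, because the values are based on powers of 2 rather than round decimal amounts, although drive manufacturers usually quote decimal units (1 GB = 1,000,000,000 bytes).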

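Important Note: Storage sizes aren’t always exact round numbers. Assuming one byte per character (as in ASCII), for example:

- 1 Kilobyte = 1,024 bytes, or 1,024 characters (not 1,000)

- 64GB USB drive ≈ 64 billion bytes, or about 64 billion characters

- 2TB hard drive ≈ 2 trillion bytes, or about 2 trillion characters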
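To see how far the power-of-2 and decimal conventions drift apart as units grow, here is a small Python comparison. The `DECIMAL` and `BINARY` tables are written out for this illustration only and assume one byte per character.

```python
# The same prefix can mean a power of 10 (decimal, as on drive packaging)
# or a power of 2 (binary, the traditional computing convention).
DECIMAL = {"KB": 10**3, "MB": 10**6, "GB": 10**9, "TB": 10**12}
BINARY = {"KB": 2**10, "MB": 2**20, "GB": 2**30, "TB": 2**40}

for amount, unit in [(1, "KB"), (64, "GB"), (2, "TB")]:
    dec = amount * DECIMAL[unit]
    binary = amount * BINARY[unit]
    print(f"{amount} {unit}: {dec:,} bytes (decimal) vs {binary:,} bytes (binary)")

# A drive sold as 64 GB (decimal) looks smaller when measured in binary units.
print(f"{64 * 10**9 / 2**30:.1f}")  # 59.6
```

The gap is only 2.4% for a kilobyte but grows to about 10% at the terabyte scale, since each step multiplies the difference again.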

The Timeless Foundation of Computing

Despite incredible advances in computer speed and miniaturization, the fundamental principle remains unchanged: all computer data consists of 0s and 1s. Whether storing numbers, text, images, or videos, everything in your computer is ultimately represented by binary digits, held as transistors switched ON (1) or OFF (0) in processors and memory, and as physical markings such as magnetized regions or stored electrical charge on storage drives.

Modern computers have simply become extraordinarily fast at manipulating these binary digits, and advances in chip manufacturing have shrunk transistors to microscopic sizes, allowing billions of them to fit on a single chip.